Cloud Workload Protection (CWP) refers to securing workloads that run in the cloud on any type of computing environment, such as physical servers, virtual instances, and containers. CWP is a relatively new term in the market. It’s also one of the few cloud security technologies that requires a third-party solution: because cloud providers don’t commonly offer it, it is a core responsibility of cloud consumers.

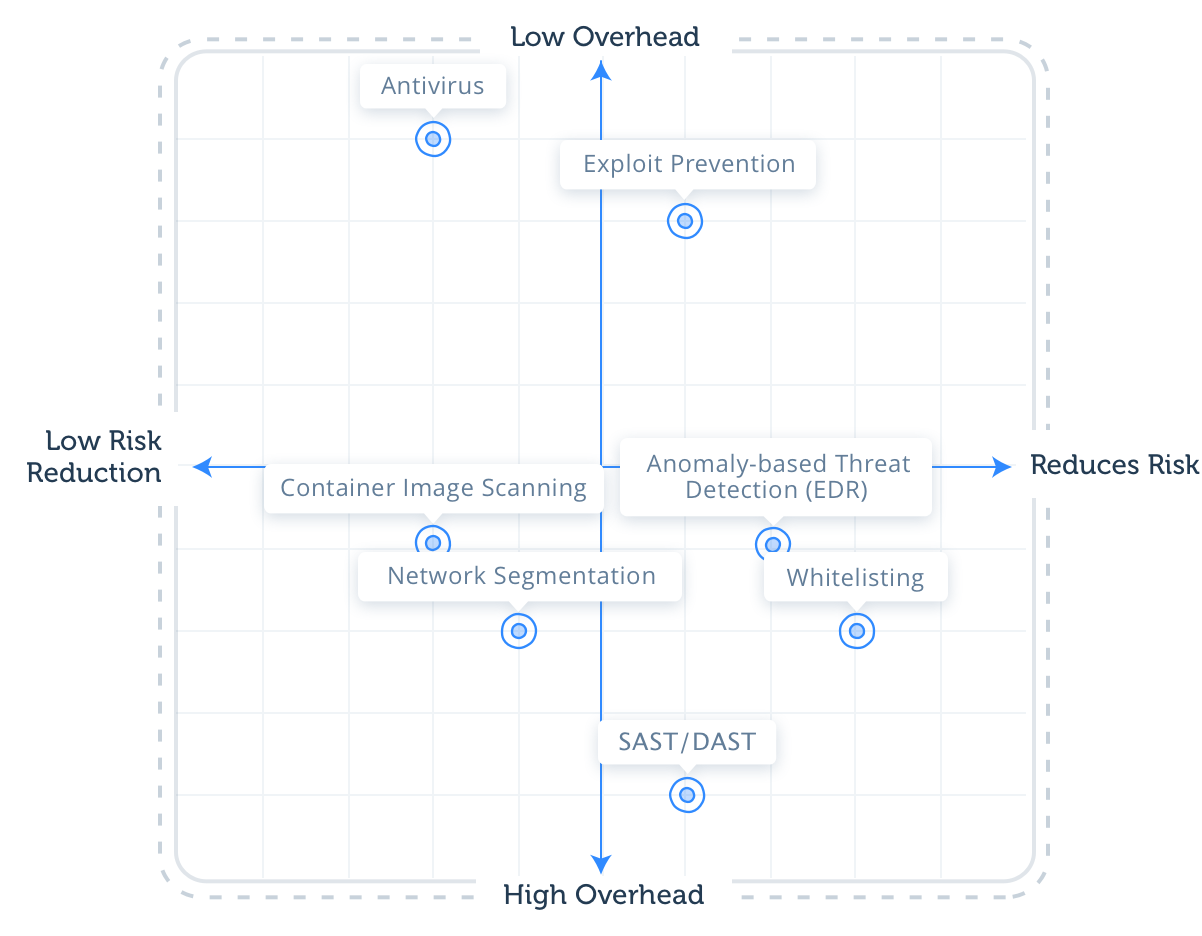

Market analysts tout the need for several CWP strategies to expand coverage against threats and secure the infrastructure (that is, ‘What’s in the Cloud’). We compared the most common CWP strategies to see which provide the best Return on Investment (ROI). The following quadrant scores the various strategies based on both their ability to reduce the risk of cyber attacks and the amount of operational overhead they produce.

Scoring Guide:

Reduces risk: A score of 0 means the strategy reduces little to no risk. No CWP strategy scored lower than 3.

A score of 10 means the strategy eliminates all risk. Since no solution can eliminate 100 percent of threats, no strategy received a 10. Whitelisting (also known as Zero Trust Execution or Application Control) received the highest risk reduction score, 8.

Overhead: A score of 0 means the strategy produces a large amount of operational overhead, which makes it less desirable because it either generates too many alerts to investigate or requires manual configuration of policies and rules. The lowest score in this category was 1 (SAST/DAST), largely due to its intrusive integration into the agile CI/CD pipeline, which some CISOs report can be a two-year process.

A score of 10 indicates the strategy produces very little to no overhead. Antivirus solutions lead the way in this category with a score of 9.

Below we provide an explanation for each score.

Anomaly-based Threat Detection (EDR):

Reduces risk: 7

Anomaly-based threat detection, the approach used by Endpoint Detection and Response (EDR) solutions, alerts on unknown suspicious behavior. Because it flags any suspicious activity that deviates from the behavioral baseline, it scored high in the risk reduction category.

The main limitation of this strategy is that attacks designed to mimic normal behavior can evade it. Threat detection based solely on behavioral indicators can create a false sense of security: an attack that doesn’t generate any noise can infiltrate the network without being identified.

Overhead: 4

Anomaly detection systems tend to produce a lot of false positives because they trigger alerts for almost any activity that looks suspicious. While that may seem like an advantage, not all suspicious-looking activity is malicious. Examples of non-malicious activity include a user logging in outside business hours, new IP addresses attempting to connect to the network, or new devices being added to the network without permission. EDR solutions require constant refining of rules and policies to avoid false positives like these.

Alerts generated by EDR solutions are also often vague and lack context, so the analyst must investigate further to determine whether an alert is a real threat or a false positive and, if it is real, identify the type of threat in order to respond properly. EDRs can also generate a great deal of telemetry, which consumes significant CPU and bandwidth and can degrade performance. For these reasons, this approach scored below average in the operational overhead category.
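To make both weaknesses concrete, here is a minimal sketch of baseline-deviation alerting. It assumes a toy baseline of two known source IPs and business hours of 08:00 to 18:59. The `Event` type, the baseline values, and `is_anomalous` are hypothetical and do not reflect how any particular EDR product works.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    user: str
    source_ip: str
    hour: int  # hour of day, 0-23

# Hypothetical learned baseline: source IPs seen before and usual working hours.
BASELINE_IPS = {"10.0.0.5", "10.0.0.6"}
BUSINESS_HOURS = range(8, 19)  # 08:00 to 18:59

def is_anomalous(event: Event) -> bool:
    """Flag any deviation from the baseline; intent is not considered."""
    return event.source_ip not in BASELINE_IPS or event.hour not in BUSINESS_HOURS

events = [
    Event("alice", "10.0.0.5", 10),     # normal activity
    Event("alice", "10.0.0.5", 23),     # legitimate late-night login
    Event("bob", "10.0.0.42", 14),      # legitimate login from a new laptop
    Event("attacker", "10.0.0.6", 11),  # compromised known host, business hours
]

alerts = [e for e in events if is_anomalous(e)]
for e in alerts:
    print(f"ALERT: {e.user} from {e.source_ip} at {e.hour}:00")
```

Both alerts are benign, while the attacker who reuses a known host during business hours raises none. This mirrors the two problems described above: noisy alerts on legitimate deviations, and blind spots for attacks that mimic normal behavior.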

Antivirus (AV):

Reduces risk: 3

Antivirus solutions (AVs) score low in the risk reduction category mainly because of their poor detection of Linux threats. It’s no secret that the antivirus industry is struggling here: in a 2019 study conducted by researchers at Team CYRU, 78% (6,931) of known Linux threats went undetected by the top 30 AV products. This is concerning given that more enterprises are adopting the cloud and Linux already accounts for 90 percent of public cloud workloads.

AVs do provide some benefit because they detect known malware, which is a top concern of cloud security teams. If a breach involves known malware, an antivirus should be able to detect it.

Unfortunately, not every attack on the cloud is malware-based. AVs won’t alert on malware-less attacks that use Living off the Land (LotL) tactics, in which attackers use trusted applications already present in the operating system to conduct an attack. Most AVs also miss the vast majority of in-memory threats, which occur when an attacker exploits a vulnerability in a trusted application and then injects malicious code into memory.

Overhead: 9

AVs typically produce low overhead in cloud environments because these environments are fairly static compared to endpoints. As for false positives, AVs’ low detection rates for Linux threats mean they generate few alerts, but only because most threats slip under the radar. AVs also usually don’t require manual policies or rules: once the cloud consumer installs the AV, the vendor is responsible for continuously updating its signatures.

The overhead AVs do produce falls mostly on server resources. Because AVs are not designed for agile, scalable, and elastic environments, they consume considerable computing power (CPU and memory), which slows performance and is a drawback in production environments.
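The trade-off above, few alerts but also few Linux detections, follows from how signature matching works. The sketch below reduces a signature to a SHA-256 hash of the whole file, which is a simplification: real AV engines also use byte patterns and heuristics. The signature set and sample contents are hypothetical.

```python
import hashlib

def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# Hypothetical vendor feed: hashes of samples the vendor has already analyzed.
# The vendor keeps this set updated; the customer configures nothing.
KNOWN_MALWARE = {sha256(b"known-linux-miner-v1")}

def scan(data: bytes) -> bool:
    """Return True only if the file matches a known signature."""
    return sha256(data) in KNOWN_MALWARE

print(scan(b"known-linux-miner-v1"))  # True: the known sample is caught
print(scan(b"known-linux-miner-v2"))  # False: a new variant passes silently
```

The second scan produces no alert and therefore no overhead, but only because the threat went unnoticed.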

Container Image Scanning:

Reduces risk: 3

Container Image Scanning is a pre-runtime process that scans your container images for known vulnerabilities. This matters because attackers in the cloud can exploit known CVEs in widely used software. While Container Image Scanning reduces the attack surface by making your applications more secure, it doesn’t detect breaches that occur at runtime. This lack of runtime visibility is a problem because most cyber attacks occur at runtime. Container Image Scanning won’t detect unknown vulnerabilities either, even though exploiting them is a prominent attack vector in the cloud.

Another disadvantage of this strategy is that it won’t scan for vulnerabilities in your own code. It checks for vulnerabilities in known software such as Apache, but not in your internally developed software, which is what SAST/DAST is intended for (more about this approach later). In other words, Container Image Scanning covers only the third-party libraries and applications imported into your containers.
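The following minimal sketch shows how an image scanner matches installed packages against a vulnerability feed, and why internally developed code falls outside its scope. The advisory IDs, package list, and version scheme (dotted integers only) are hypothetical. Real scanners consume published advisory feeds and handle far more complex version formats.

```python
from typing import Dict, List, Tuple

def parse(version: str) -> Tuple[int, ...]:
    """Parse a dotted version such as '2.4.49' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))

# Hypothetical advisory feed: package -> [(advisory id, first fixed version)].
ADVISORIES: Dict[str, List[Tuple[str, str]]] = {
    "apache-httpd": [("HYPOTHETICAL-VULN-0001", "2.4.50")],
    "libexample": [("HYPOTHETICAL-VULN-0002", "1.2.0")],
}

# Packages found in a container image: name -> installed version.
image_packages = {
    "apache-httpd": "2.4.49",
    "libexample": "1.2.0",
    "acme-billing-service": "0.9.0",  # hypothetical in-house application
}

def scan_image(packages: Dict[str, str]) -> Tuple[List[str], List[str]]:
    findings: List[str] = []
    unchecked: List[str] = []
    for name, version in packages.items():
        if name not in ADVISORIES:
            # No public advisories exist, so there is nothing to compare against.
            unchecked.append(name)
            continue
        for advisory_id, fixed_in in ADVISORIES[name]:
            if parse(version) < parse(fixed_in):
                findings.append(f"{name} {version}: {advisory_id} (fixed in {fixed_in})")
    return findings, unchecked

findings, unchecked = scan_image(image_packages)
print("Findings:", findings)
print("Not covered:", unchecked)
```

`libexample` is already at the fixed version and passes, while `acme-billing-service` is never evaluated at all. That unevaluated in-house code is the gap SAST/DAST is meant to fill.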

Overhead: 4

Container Image Scanning scores just below average in the overhead category because fixing the vulnerabilities it finds requires significant effort. Integrating this strategy into the CI/CD pipeline can also create operational challenges, because security teams need cooperation from the development team: changing the CI/CD pipeline requires both buy-in and time from developers and/or DevOps engineers.

Exploit Prevention:

Reduces risk: 6

Exploitation of known and unknown vulnerabilities is a major attack vector for infecting cloud servers. If a vulnerability is exploited at runtime, Exploit Prevention will detect it. However, not all cyber attacks in the cloud require exploitation: an attacker can use a simple misconfiguration in the workload to run a malicious program and conduct an attack.

Overhead: 8

Exploit Prevention produces low overhead because it’s deployed as a ‘Set it and Forget it’ type of solution. A good Exploit Prevention solution shouldn’t generate many alerts because, in theory, there are few false signs of exploitation in a fairly static environment such as the cloud. If this strategy detects an exploitation technique in memory, the detection is probably accurate. This depends on the product, however, as some vendors alert on memory corruption events that turn out to be false positives.

Microsegmentation:

Reduces risk: 4

The main function of Microsegmentation is to deny attackers the ability to move laterally between servers and exfiltrate data. If an attacker compromises one of an enterprise’s servers, segmentation is meant to prevent them from moving to another server. This strategy acts like a granular firewall that blocks unauthorized communication between nodes. Reducing the attack surface limits and frustrates attackers, but once they gain access to a server, they can still operate within the allowed segments and rules to exfiltrate data and move laterally, even with Microsegmentation in place.

Overhead: 3

By design, Microsegmentation applies a default-deny policy to network traffic, which means legitimate communication between containers or workloads is sometimes blocked. Fixing this typically requires a manual policy update to redefine which servers can communicate with a given workload, yet another process that can be cumbersome for DevOps, security, and development teams.
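The default-deny behavior, and the manual policy update it forces, can be sketched as an allowlist of network flows. The service names, ports, and the `permit` function are hypothetical. Real microsegmentation products enforce policy in the network or host firewall, not in application code.

```python
from typing import NamedTuple, Set

class Flow(NamedTuple):
    src: str
    dst: str
    port: int

# Hypothetical segmentation policy: only explicitly listed flows are allowed.
allowed: Set[Flow] = {
    Flow("web", "api", 8080),
    Flow("api", "db", 5432),
}

def permit(flow: Flow) -> bool:
    """Default deny: anything not explicitly allowed is blocked."""
    return flow in allowed

print(permit(Flow("api", "db", 5432)))   # True: expected traffic
print(permit(Flow("web", "db", 5432)))   # False: lateral move is blocked
print(permit(Flow("web", "api", 8080)))  # True: a compromised web server can still use this path

new_flow = Flow("api", "cache", 6379)    # legitimate dependency added in a new release
print(permit(new_flow))                  # False until someone updates the policy
allowed.add(new_flow)                    # the manual policy update
print(permit(new_flow))                  # True
```

The blocked `api` to `cache` flow shows the operational cost, and the permitted `web` to `api` flow shows why an attacker can still move along paths the policy allows.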

SAST/DAST:

Reduces risk: 6

We will preface this explanation by saying SAST/DAST isn’t exactly a CWP strategy.

SAST/DAST stands for Static and Dynamic Application Security Testing. We included it in this article because developers mainly use it to check for vulnerabilities in their own code, so it does address some significant aspects of securing cloud applications.

SAST/DAST scores above average in the risk reduction category, but not higher, for a few reasons. First, vulnerability exploitation is not the only attack vector; attackers can find other ways into the server, such as through a misconfiguration. In addition, attackers can exploit vulnerabilities that SAST/DAST did not detect, or exploit vulnerabilities in the container or virtual machine, which are unrelated to the developers’ own code.

Most importantly, SAST/DAST operates only pre-runtime, before applications reach production, which means malicious code injected into memory after a successful exploit won’t be detected at runtime. This approach also won’t detect Living off the Land (LotL) tactics, in which an attacker uses a legitimate application in the operating system to gain access to the environment. Overall, SAST/DAST is an important solution to have because it reduces the attack surface. However, it shouldn’t be relied on as the main layer of CWP because of these limitations.

Overhead: 1

Some CISOs report that fixing vulnerabilities in their applications before moving them into production can be a two-year process. This requires an intrusive integration with the CI/CD pipeline, which is time-consuming and more complex than patching vulnerabilities in the container or operating system.


Whitelisting:

Reduces risk: 8

Whitelisting (also known as Zero Trust Execution or Application Control) is widely considered an essential CWP strategy because it can automatically restrict malicious, untrusted, and potentially unwanted applications (PUAs). Whitelisting works as a default-deny policy, immediately blocking any deviation from the baseline.

The downside of whitelisting is that it doesn’t provide complete attack coverage, most notably against Living off the Land (LotL) tactics and in-memory threats, the latter of which occur when an attacker exploits a vulnerability in a trusted application and injects malicious code into memory. This is why market analysts recommend pairing App Control with memory protection capabilities to gain full runtime coverage.

Overhead: 3

Nearly all App Control solutions are inflexible to signature changes: they block any software upgrade or update whose hash doesn’t exactly match an approved one. This produces many false positive alerts. Whitelisting also leads to strict and often unrealistic policies, since unblocking an application after every minor update is tedious for both security and DevOps teams.
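The hash-matching problem fits in a few lines. In this sketch, the allowlist stores SHA-256 digests of approved binaries. The byte strings stand in for real executables and are hypothetical.

```python
import hashlib

def digest(binary: bytes) -> str:
    return hashlib.sha256(binary).hexdigest()

# Hypothetical binaries: a baseline build and a routine minor update.
app_v1_0 = b"example-app build 1.0"
app_v1_1 = b"example-app build 1.1"

# Allowlist captured from the approved baseline.
allowlist = {digest(app_v1_0)}

def may_execute(binary: bytes) -> bool:
    """Default deny: run only binaries whose exact hash was approved."""
    return digest(binary) in allowlist

print(may_execute(app_v1_0))  # True
print(may_execute(app_v1_1))  # False: the update has a different hash and is blocked
```

Until someone adds the new digest to `allowlist`, the update is treated like untrusted software. The check also runs only when a binary is launched, so code injected into the memory of an already approved process never passes through it.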

Each Cloud Workload Protection strategy has its own advantages and disadvantages. This is why market analysts recommend that cloud consumers use several CWP strategies to expand their threat coverage and reduce overall risk. While reducing the risk of breaches is paramount, understanding how much overhead your CWP solution generates can be equally important for easing the burden on your security teams and getting the most return on your investment.
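To compare the strategies side by side, the scores from the sections above can be placed into quadrants. The article does not state where the quadrant boundaries lie, so this sketch assumes a split above the midpoint of 5 on both 0-10 scales. Remember that a higher overhead score means less overhead.

```python
from typing import Dict, Tuple

# Scores from the sections above: (reduces risk, overhead).
# A higher overhead score means LESS operational overhead.
SCORES: Dict[str, Tuple[int, int]] = {
    "Anomaly-based detection (EDR)": (7, 4),
    "Antivirus": (3, 9),
    "Container Image Scanning": (3, 4),
    "Exploit Prevention": (6, 8),
    "Microsegmentation": (4, 3),
    "SAST/DAST": (6, 1),
    "Whitelisting": (8, 3),
}

MIDPOINT = 5  # assumed quadrant boundary; not stated in the article

def quadrant(risk: int, overhead: int) -> str:
    risk_label = "high risk reduction" if risk > MIDPOINT else "low risk reduction"
    overhead_label = "low overhead" if overhead > MIDPOINT else "high overhead"
    return f"{risk_label}, {overhead_label}"

for name, (risk, overhead) in SCORES.items():
    print(f"{name:32} risk={risk} overhead={overhead} -> {quadrant(risk, overhead)}")
```

Under this assumption, Exploit Prevention is the only one of the seven strategies that lands in the high risk reduction, low overhead quadrant.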

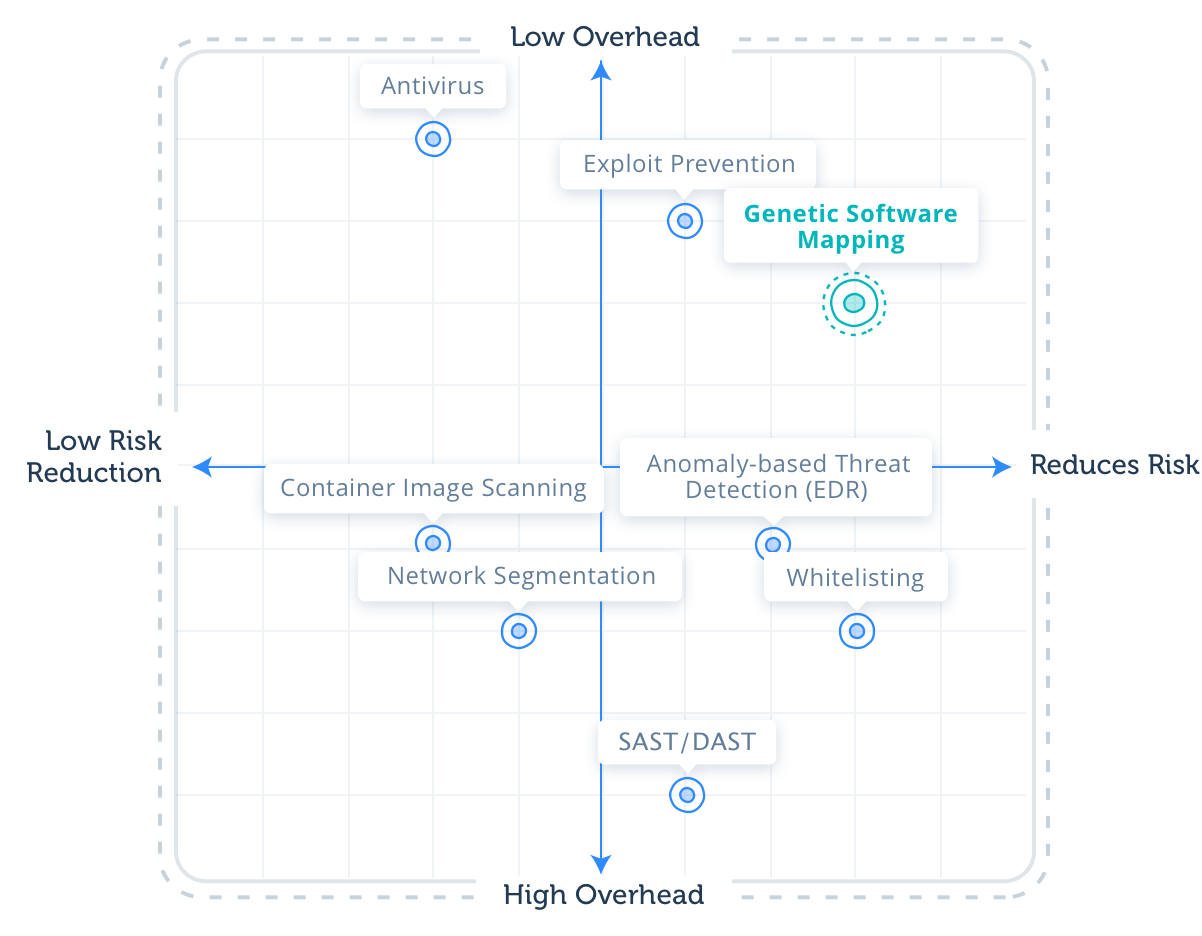

At Intezer, we take a Genetic Software Mapping approach to Cloud Workload Protection that integrates many of these strategies into one solution. Below, we describe this approach and where it falls on the quadrant.

Genetic Software Mapping:

Reduces risk: 8

Genetic Software Mapping classifies unknown code and applications by revealing the origins of their code.

This technology can help enterprises adopt a Zero Trust Execution strategy, which scored high in the risk reduction category, combined with in-memory capabilities that provide full visibility over the code and applications running in their systems. Genetic Software Mapping’s combination of Zero Trust Execution and memory protection makes for a strong defense against a wide range of attack vectors, including malicious code, unauthorized or risky software, exploitation of known and unknown vulnerabilities, and other fileless threats.

Overhead: 7

With this approach, any detected deviation from the baseline is inspected at the code level, and alerts are raised only for deviations that present true risk, not for natural deviations such as legitimate software upgrades that don’t require a response. As a result, enterprises can adopt a Zero Trust Execution strategy without the high maintenance and false positives it usually brings. Additionally, because this technology is based on code reuse rather than signatures or behavioral indicators, it produces only high-confidence, contextualized alerts that include the code’s origin and malware family.
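The article does not describe the Genetic Software Mapping algorithm itself. The following is a deliberately simplified stand-in, not Intezer’s method. It uses chunk hashing with Jaccard similarity to show why comparing shared code units, rather than whole-file hashes, can tolerate a routine upgrade while still flagging unrelated code. The chunk size, threshold, and toy binaries are all hypothetical.

```python
import hashlib
from typing import Iterable, Set

CHUNK = 16  # hypothetical size of one "code unit" in bytes

def make_binary(functions: Iterable[str]) -> bytes:
    """Build a toy binary in which each function fills exactly one chunk."""
    return b"".join(f.encode().ljust(CHUNK, b" ") for f in functions)

def chunk_hashes(code: bytes) -> Set[str]:
    return {hashlib.sha256(code[i:i + CHUNK]).hexdigest() for i in range(0, len(code), CHUNK)}

def similarity(a: bytes, b: bytes) -> float:
    """Jaccard similarity of chunk hashes: share of code units the binaries have in common."""
    ha, hb = chunk_hashes(a), chunk_hashes(b)
    return len(ha & hb) / len(ha | hb)

baseline = make_binary(f"func_{i:04d}_body;" for i in range(100))
# A routine upgrade: 95 functions unchanged, 5 rewritten.
upgrade = make_binary([f"func_{i:04d}_body;" for i in range(95)] + [f"newf_{i:04d}_body;" for i in range(5)])
# Code with no relationship to the baseline.
unknown = make_binary(f"evil_{i:04d}_body;" for i in range(100))

THRESHOLD = 0.8  # hypothetical cut-off for "same software lineage"

for label, sample in [("upgrade", upgrade), ("unknown", unknown)]:
    exact = hashlib.sha256(sample).digest() == hashlib.sha256(baseline).digest()
    score = similarity(baseline, sample)
    verdict = "no alert" if score >= THRESHOLD else "ALERT: unrecognized code"
    print(f"{label}: exact hash match={exact}, similarity={score:.2f} -> {verdict}")
```

Neither sample matches the baseline’s whole-file hash, so a strict hash allowlist would block both. Comparing shared code units separates the upgrade from the unrelated code. Fixed-offset chunks are fragile, though: inserting a single byte shifts every later chunk, which is one reason a practical system would need more robust code units, such as functions.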
